Using DALL-E 2 for Interior Design

With advancements in AI-generated images happening so quickly these days, this post will probably be outdated the moment it’s published. But I figured it could be good to document some experiments with DALL-E 2 and interior design while it still feels fresh.

DALL-E 2, if you don’t know, is a new text-to-image generation model from OpenAI. DALL-E 2 and similar models (like Stable Diffusion and Imagen) work in broadly the same way: they were trained on millions of captioned images from across the web, learned what things are called and what they look like, and so can generate images of basically anything you request.

(If you’re interested in generating images from scratch, this DALL-E 2 Prompt Book is an amazing guide.)

Although it’s impressive to create images from nothing, I’m more interested in using this technology to augment existing images. This is sometimes called “inpainting”, and it’s a powerful way to bring the magic of AI-generated images into real-life photos that have significance to you.

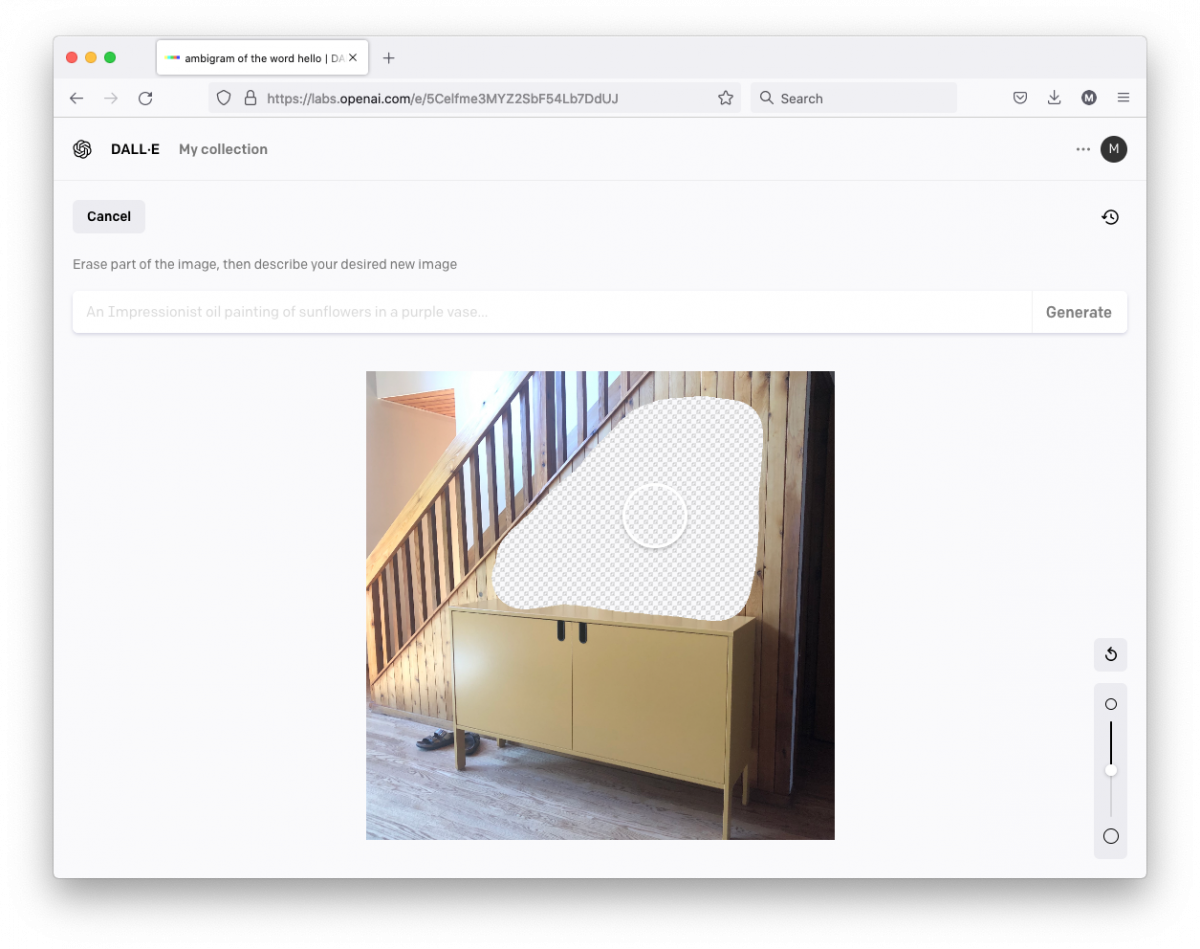

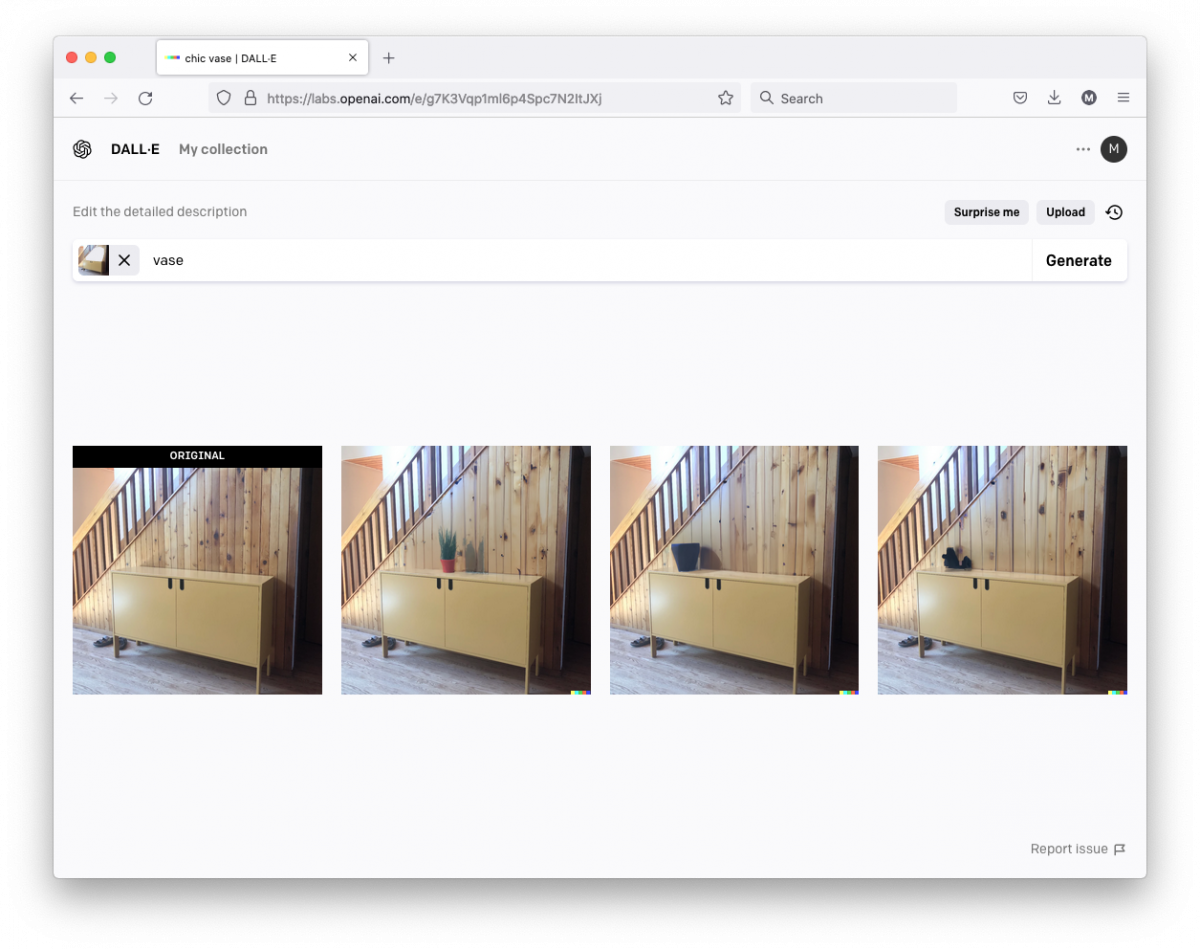

For example, I wanted to see how I could use AI to add some design flair to a newly-purchased cabinet in my dining room, so I uploaded this image to DALL-E 2 and got started.

DALL-E 2 has an interface that lets you define which areas to augment, so I hastily defined an area I wanted to decorate.

From there, you can start typing and describing what you want in your image. With inpainting, only the parts of the image you define will be augmented; the rest will be unchanged.
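To make the “only the selected area changes” idea concrete, here’s a toy sketch in Python. It is not how DALL-E 2 works internally, and it’s not something I ran as part of these experiments; it only illustrates the masking principle, with tiny grids of numbers standing in for images. The `composite` function and all the values are made up for illustration.

```python
def composite(original, generated, mask):
    """Build a new image: generated pixels where mask is True, original pixels elsewhere."""
    return [
        [gen if m else orig for orig, gen, m in zip(o_row, g_row, m_row)]
        for o_row, g_row, m_row in zip(original, generated, mask)
    ]


# A 3x4 "photo" where every pixel has the value 10 (hypothetical data).
original = [[10, 10, 10, 10] for _ in range(3)]

# Stand-in for whatever the model would paint; here every pixel is 99.
generated = [[99, 99, 99, 99] for _ in range(3)]

# The area selected for editing: a 2x2 patch in the lower middle.
mask = [
    [False, False, False, False],
    [False, True, True, False],
    [False, True, True, False],
]

result = composite(original, generated, mask)
for row in result:
    print(row)
```

Only the four masked pixels take on new values; everything outside the selection is copied straight from the original.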

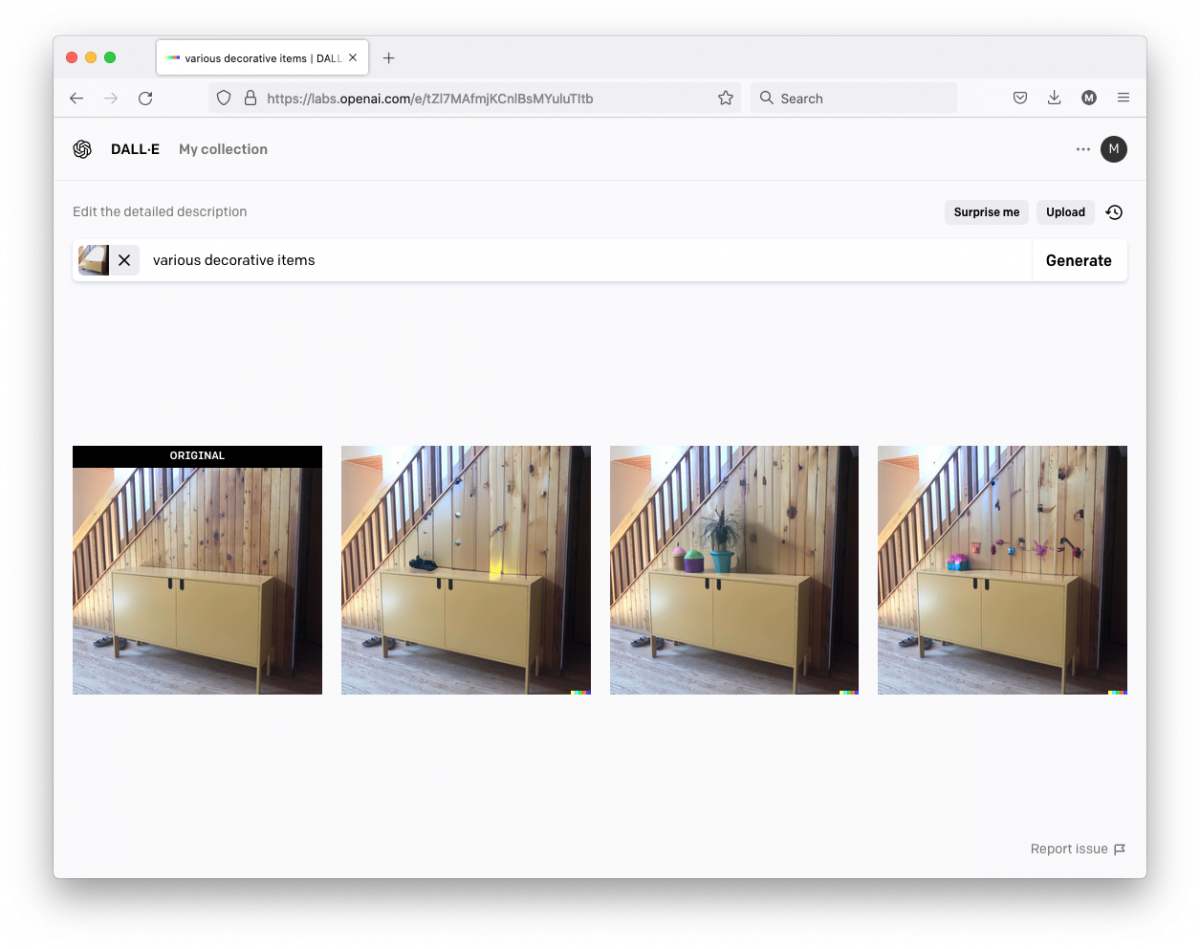

To start experimenting, I typed “various decorative items”.

Some results were better than others. But there’s potential. Next I thought to try something a little broader.

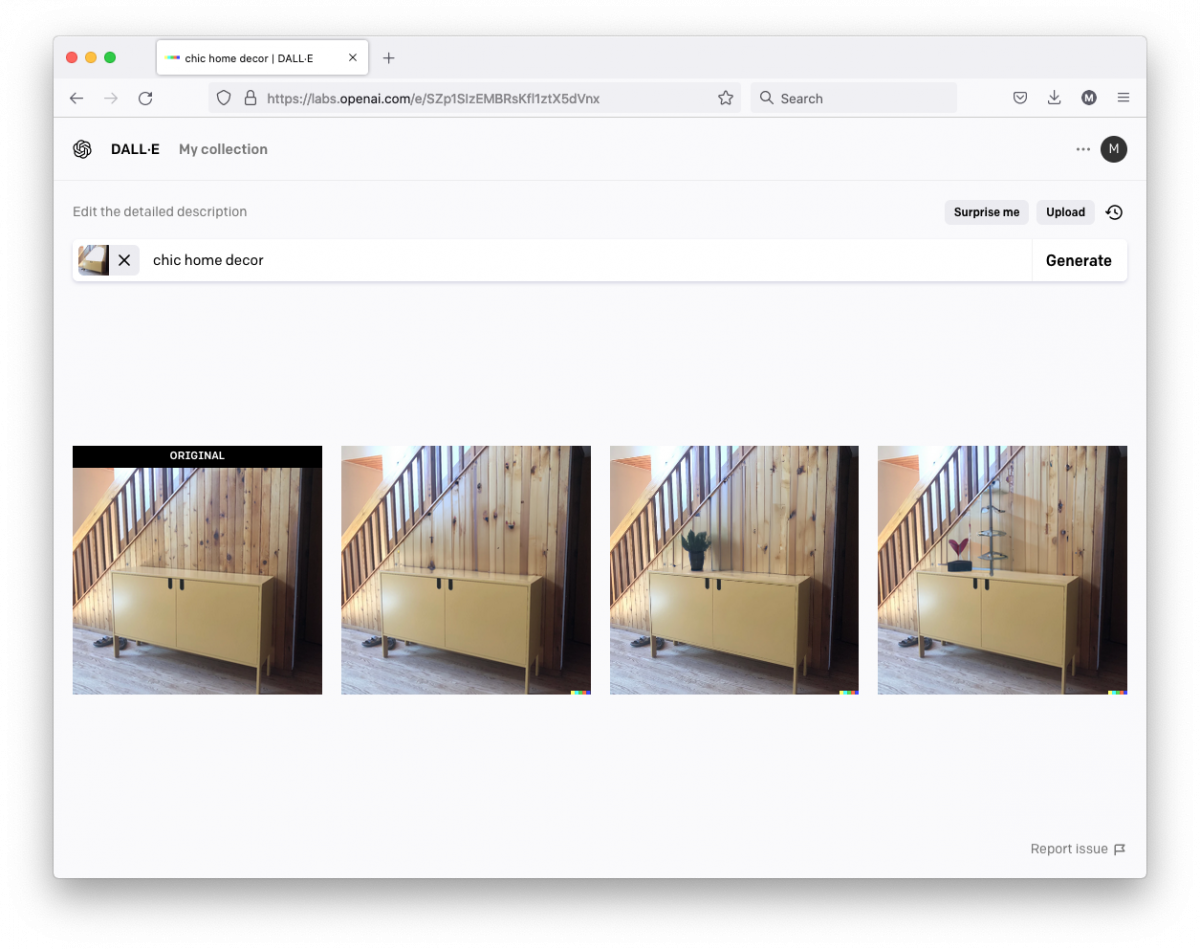

Still not much luck. So then I got more specific, and things started looking better.

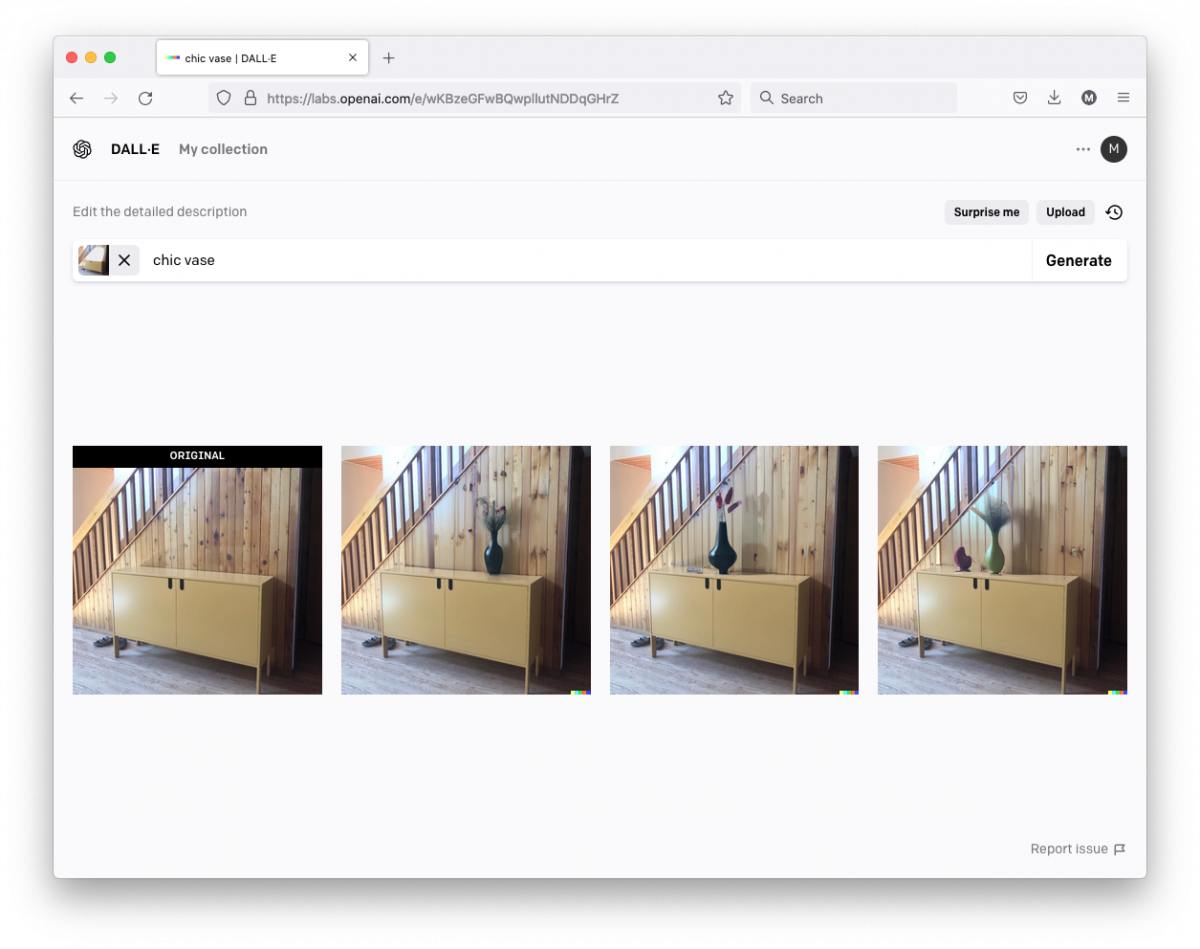

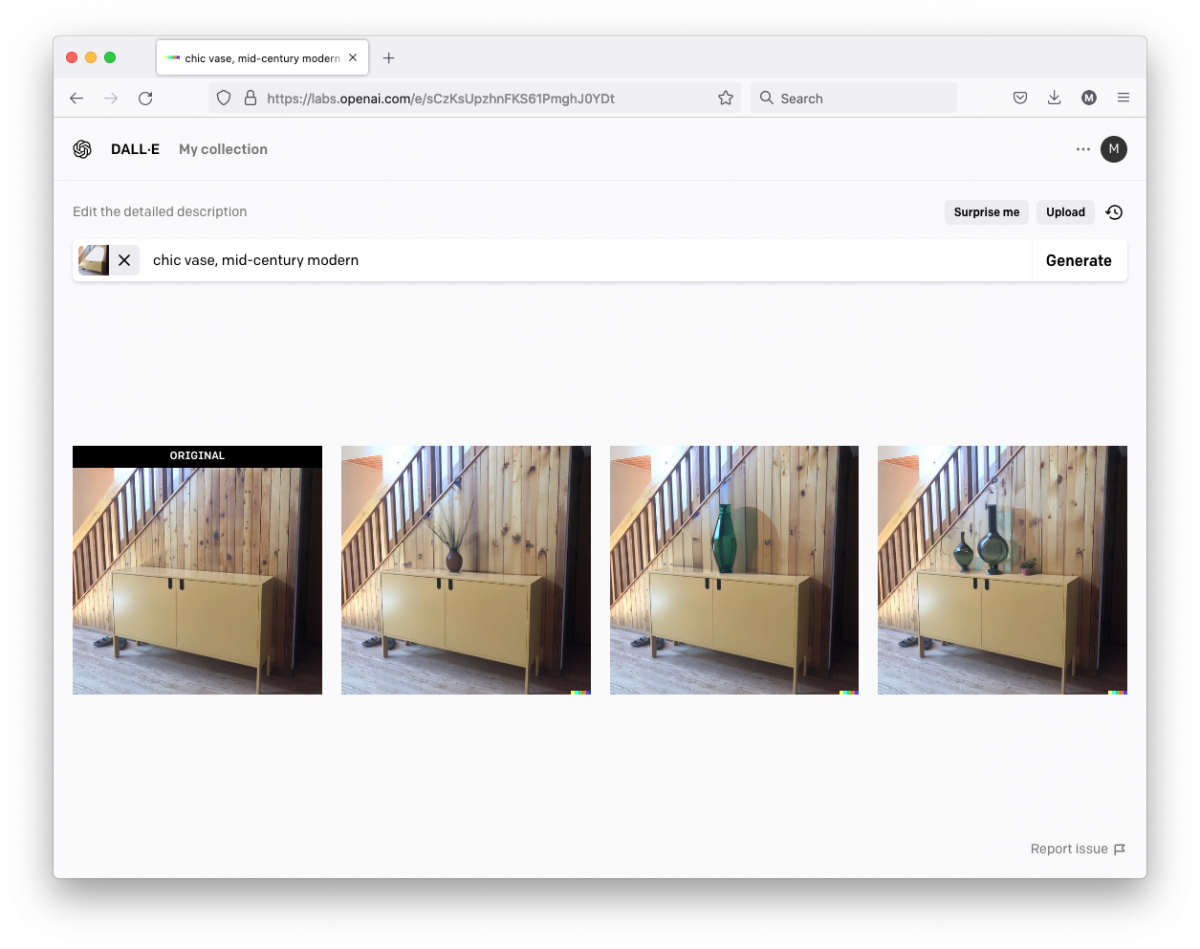

Descriptive words like “chic” definitely help, because when I tried just “vase” the results were uninspired.

Next I tried some more descriptive stylistic phrases and it seemed to help.

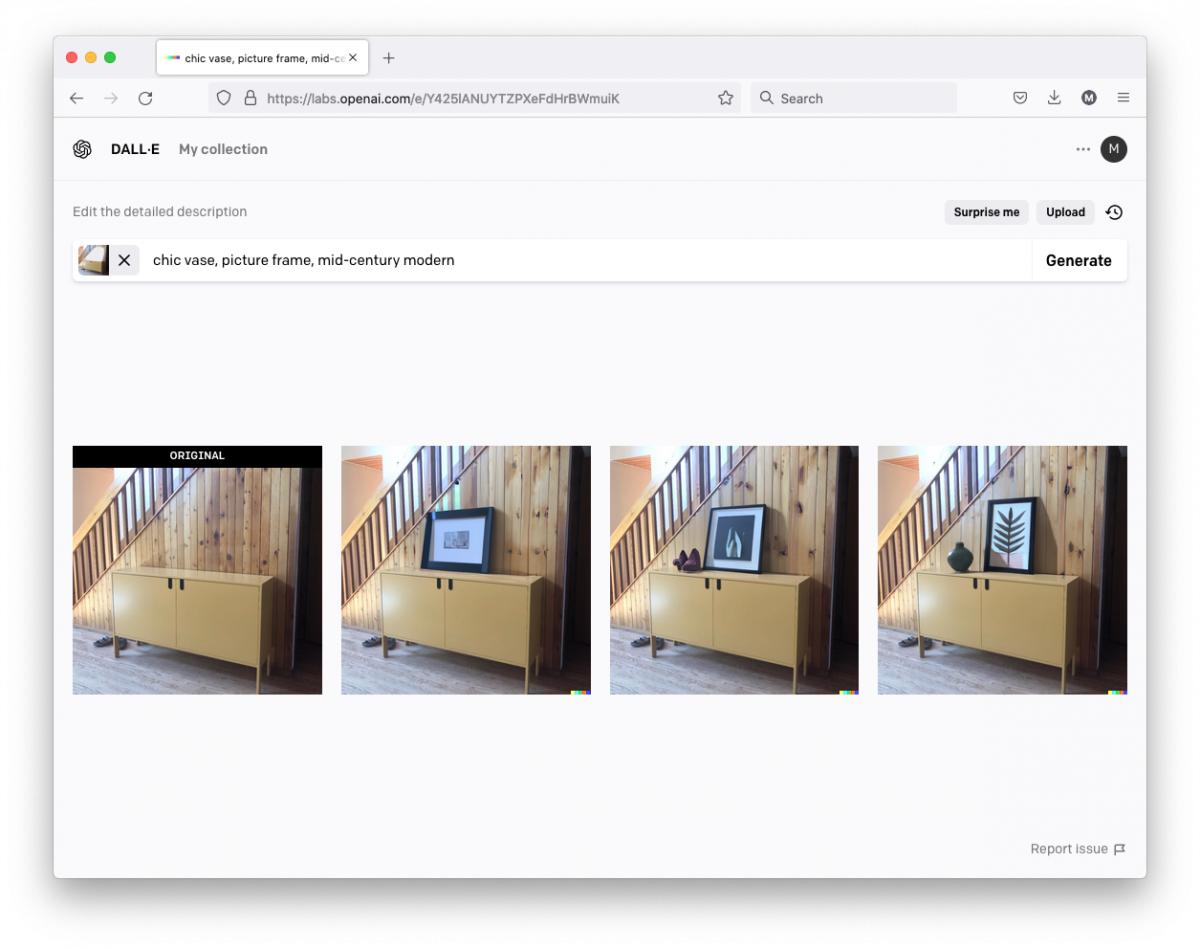

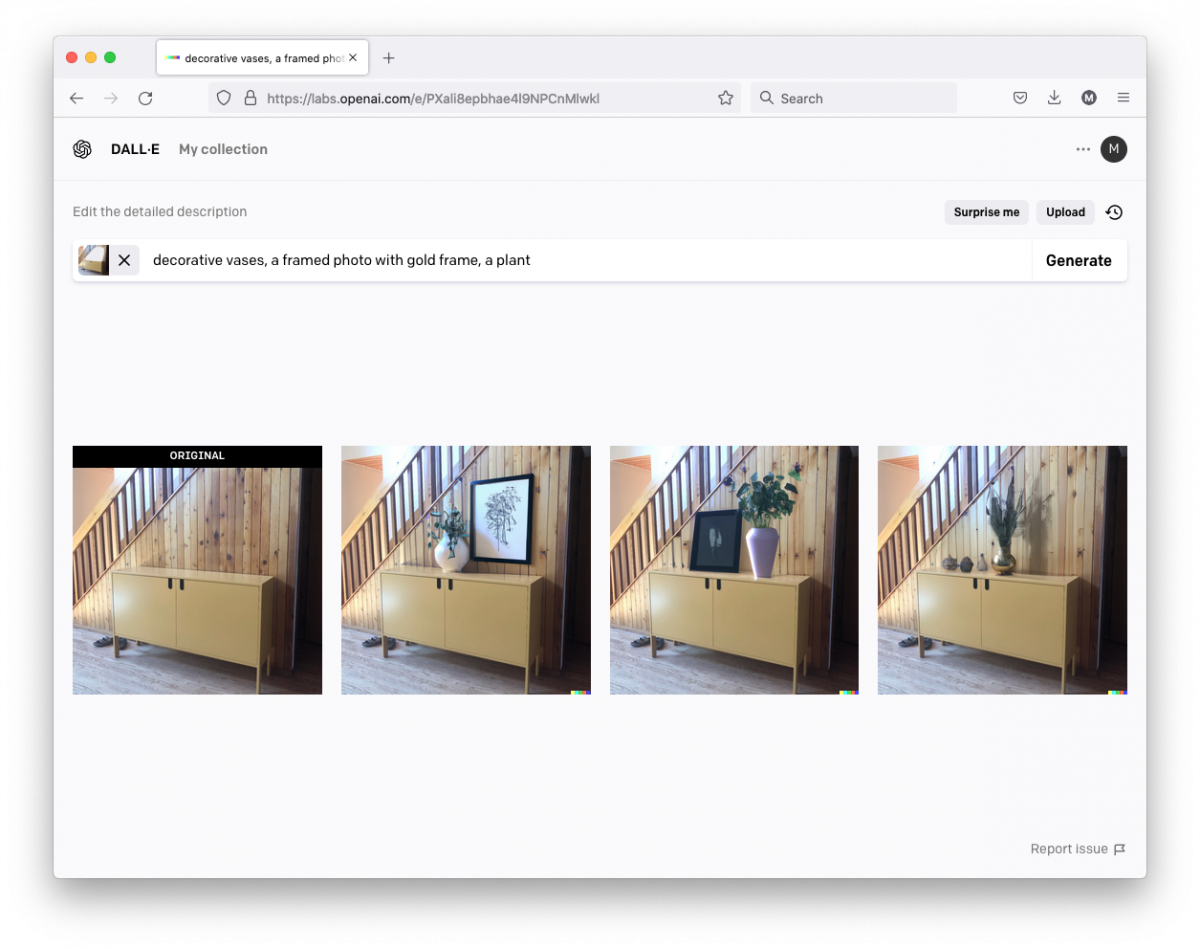

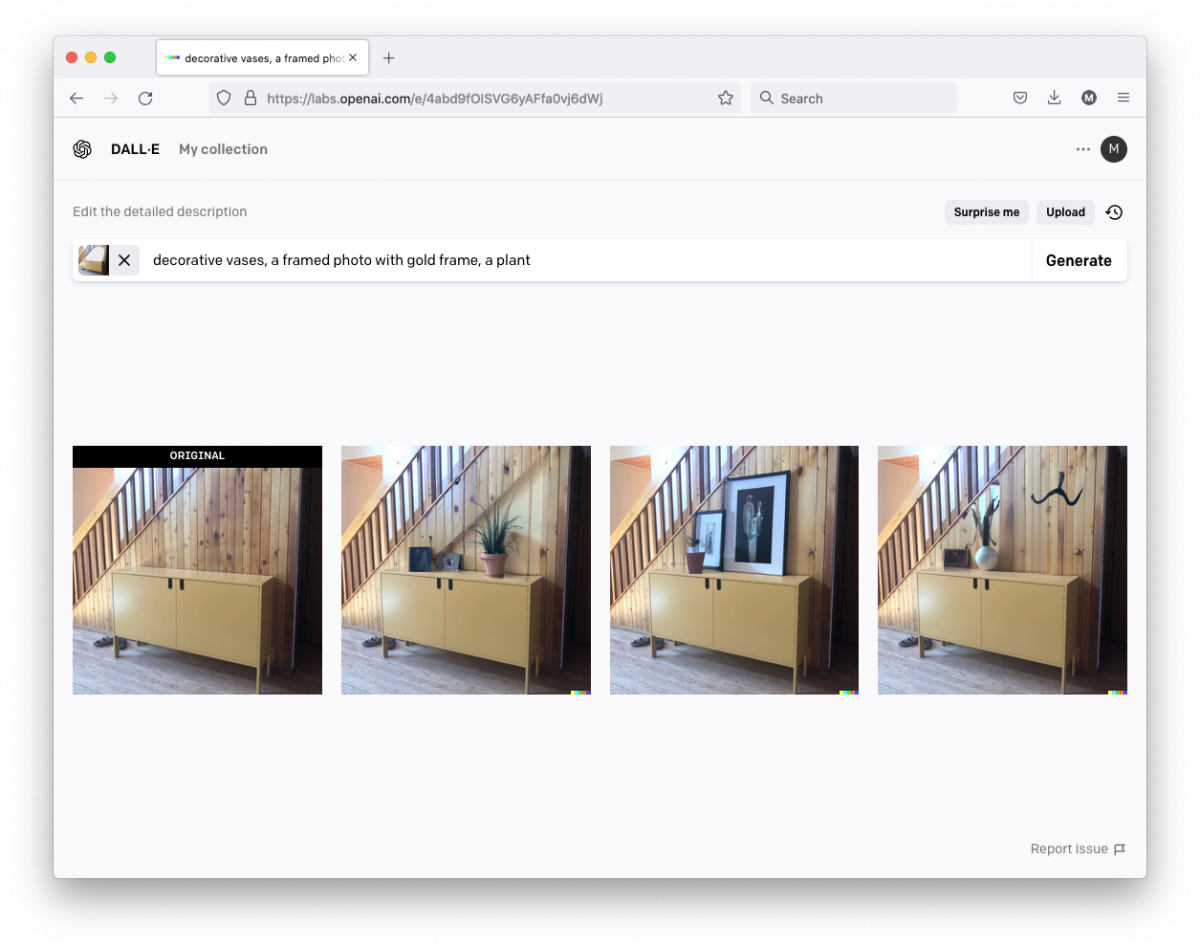

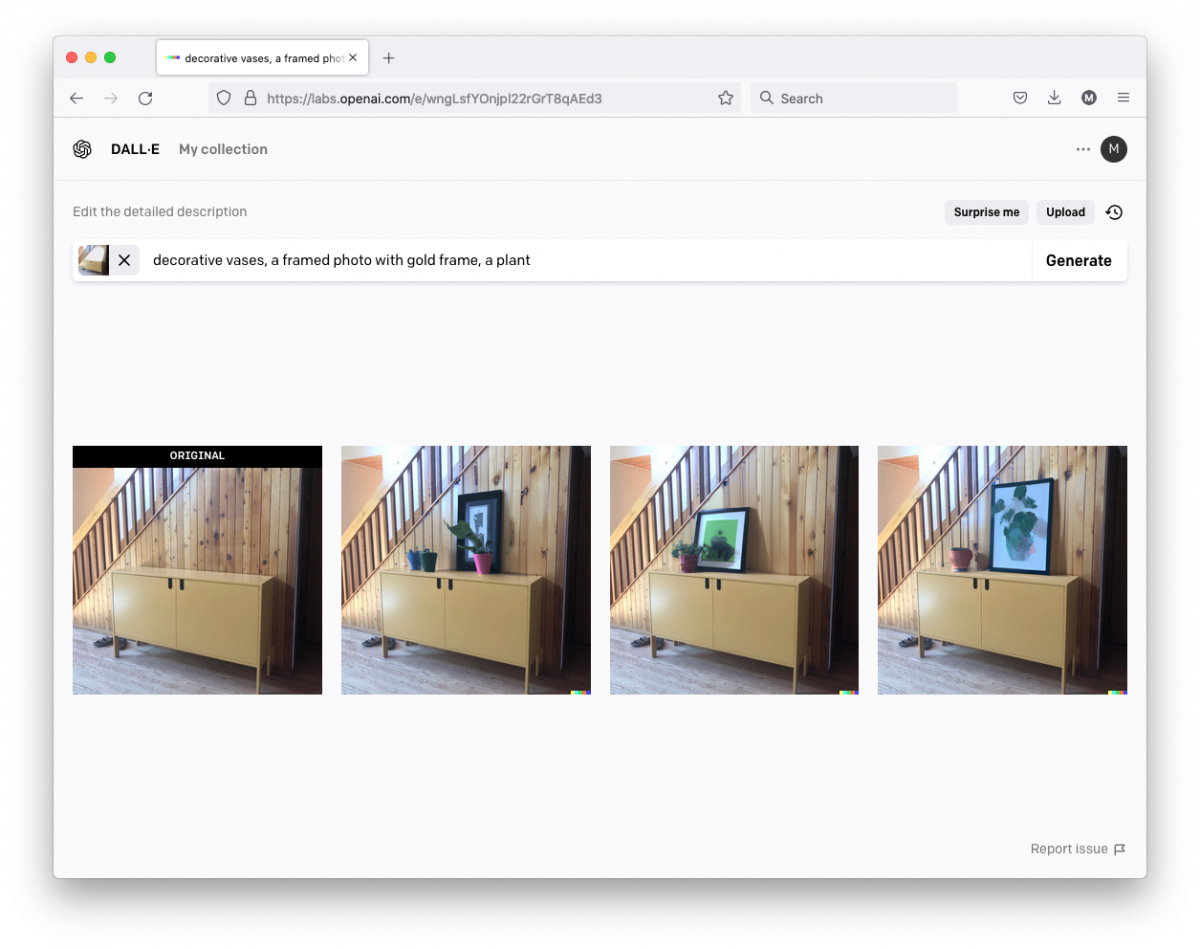

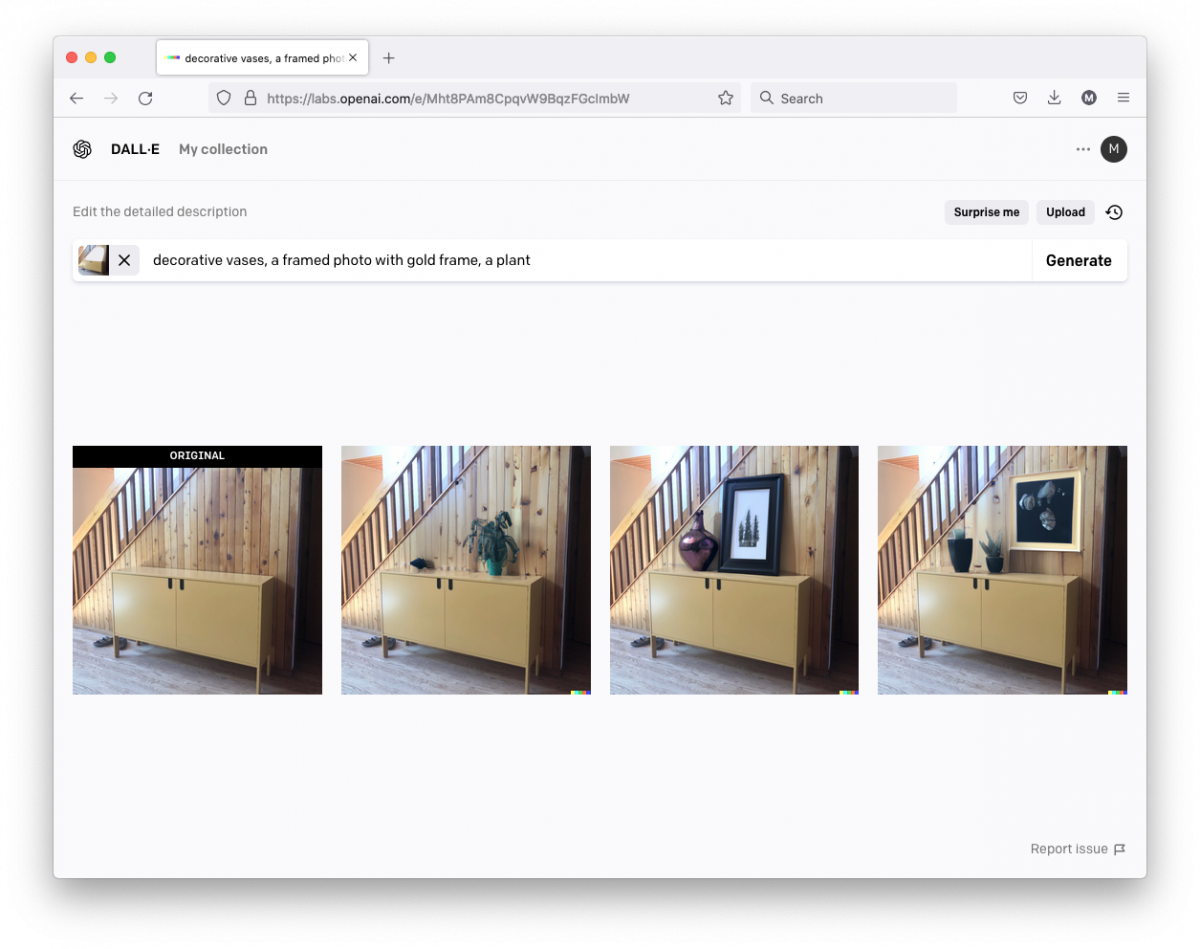

I realized I was going to need to specifically define which objects to include in the scene, so I opted to add a picture frame and a plant. After some experimentation and variations, things were starting to look pretty good…

After I found a list of objects that were working well, I just kept refreshing the same prompt and finding images that I liked…

So after a bunch of experimentation and learning what I liked and what I didn’t, I was finally ready to make a journey to Home Goods and purchase some stuff. Here’s how it turned out:

So that’s my first foray into using AI to augment the interior design process. The end result isn’t particularly breathtaking stylistically, but I found that the process of seeing a bunch of options to help define my preferences was really helpful – and kinda fun too.

(Here’s a business idea for you: A Tinder-style app for decorating your space. Upload a photo of the area you want to decorate, then swipe right/left on AI-generated images of decor options as the app learns your style preferences. Then it’ll generate affiliate links to similar products on Amazon, and you’re golden.)

Overall this process was relatively inexpensive. DALL-E 2 charges $15 for 115 credits, and each credit produces 3 images, which works out to just over $0.04 per image. I used about 100 credits (roughly 300 images) during these experiments, so it cost around $13 to figure out how to furnish this part of my home. Not bad compared with interior designers, who can charge hundreds of dollars per hour. And this stuff will only get cheaper with time, especially now that Stable Diffusion’s model has been open-sourced.
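If you want to check the math or plug in different numbers, here’s a small Python sketch. It uses only the prices quoted in this post, which may well have changed by the time you read this; the constant and function names are just ones I picked for illustration.

```python
# Prices as quoted in this post (assumed; check current pricing yourself).
PACK_PRICE_USD = 15.00
CREDITS_PER_PACK = 115
IMAGES_PER_CREDIT = 3


def cost_per_credit():
    """Dollar cost of a single credit."""
    return PACK_PRICE_USD / CREDITS_PER_PACK


def cost_per_image():
    """Dollar cost of a single generated image."""
    return cost_per_credit() / IMAGES_PER_CREDIT


def cost_for_credits(credits_used):
    """Dollar cost of using a given number of credits."""
    return credits_used * cost_per_credit()


credits_used = 100  # roughly what these experiments took
print(f"Per image: ${cost_per_image():.4f}")
print(f"Images generated: {credits_used * IMAGES_PER_CREDIT}")
print(f"Total: ${cost_for_credits(credits_used):.2f}")
```

Swap in your own `credits_used` to estimate what a similar round of experiments would cost.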

If this is what one random dude can achieve with this technology, I’d love to see what an actual interior-decor specialist can do with it.

Special thanks to the phenomenal Marcha Johnson (LinkedIn, Instagram) for arranging access to DALL-E 2!